Intro

I have an Intel Arc A380 in my Dell T440 server for Plex and remote gaming through Sunshine. I set up Hoarder and wanted a way to use the AI features without paying for OpenAI tokens. This proved extremely difficult, as none of the readily available solutions seemed to work.

That changed when I tried LMStudio. It worked out of the box, but I could not get it to launch headless on boot, and its OpenAI-compatible API implementation did not work with Hoarder. I later learned that LMStudio uses a Vulkan backend, so I went looking for an Ollama build with Vulkan support.

Ollama does not currently support Vulkan, and it looks like it won’t any time soon (read about that here), but there is an actively developed fork by @Whyvl, ollama-vulkan.

Below are the steps needed to build and run Ollama with a Vulkan backend.

Pre-requisites

I used an Ubuntu 24.04 (Noble Numbat) host, so your mileage may vary under different distros.
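The LunarG repository commands in this section hardcode `noble`. If you are on a different Ubuntu release, a minimal sketch like the one below could derive the list URL from your codename instead. The function names are my own, and I am assuming LunarG publishes a list file under the same naming pattern for your release, which I have only used for noble.

```shell
#!/bin/sh
# Print the codename recorded in an os-release file (default: /etc/os-release).
codename_from() {
    # Source the file in a subshell so its variables do not leak into this shell.
    ( . "${1:-/etc/os-release}" && printf '%s\n' "${VERSION_CODENAME:-}" )
}

# Build the LunarG apt list URL for a codename.
# Assumption: the file name always follows lunarg-vulkan-<codename>.list.
lunarg_list_url() {
    printf 'http://packages.lunarg.com/vulkan/lunarg-vulkan-%s.list\n' "$1"
}
```

Calling `lunarg_list_url "$(codename_from)"` gives you the URL to pass to the `wget` command for the sources list. If the download fails, LunarG probably has no packages for that release.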

To install the required packages, issue the following commands:

sudo apt update

sudo apt install git cmake wget libcap-dev golang-go

To install the vulkan-sdk, you need to run:

wget -qO- https://packages.lunarg.com/lunarg-signing-key-pub.asc | sudo tee /etc/apt/trusted.gpg.d/lunarg.asc

sudo wget -qO /etc/apt/sources.list.d/lunarg-vulkan-noble.list http://packages.lunarg.com/vulkan/lunarg-vulkan-noble.list

sudo apt update

sudo apt install vulkan-sdk

You also need this file: fix-vulkan-building.patch. Save it somewhere easily accessible (like your downloads folder).
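Before moving on, it can save time to confirm the tools are actually on your PATH. This is only a small sketch: `missing_tools` is a hypothetical helper name, and the command list in the usage note is my assumption about what the packages above provide.

```shell
#!/bin/sh
# Print every command from the argument list that cannot be found on PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}
```

For example, `missing_tools git cmake wget go glslc vulkaninfo` should print nothing when everything is installed. Any name it does print points at a package that did not install cleanly.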

Setting up the repo

Next, you need to set up the repositories and apply the patch you downloaded. Use:

git clone -b vulkan https://github.com/whyvl/ollama-vulkan.git

cd ollama-vulkan

git remote add ollama_vanilla https://github.com/ollama/ollama.git

git fetch ollama_vanilla --tags

# the latest release as of now is 0.5.11

git checkout tags/v0.5.11 -b ollama_vanilla_stable

git checkout vulkan

git merge ollama_vanilla_stable --allow-unrelated-histories --no-edit

patch -p1 -N < <path to patch file>

Building Ollama

After downloading the repo and applying the patch, it's time to build!

Run:

make -f Makefile.sync clean sync

# CPU libraries

cmake --preset CPU

cmake --build --parallel --preset CPU

cmake --install build --component CPU --strip

# Vulkan libraries

cmake --preset Vulkan

cmake --build --parallel --preset Vulkan

cmake --install build --component Vulkan --strip

# Binary

source scripts/env.sh || true

mkdir -p dist/bin

go build -trimpath -buildmode=pie -o dist/bin/ollama .

Once the build is finished, run the server with:

OLLAMA_HOST=0.0.0.0:11434 ./dist/bin/ollama serve

If you get the error “Please enable CAP_PERFMON or run as root to use Vulkan.”, you have to run the binary as root.
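Since the whole point for me was a headless server that comes up on boot, a systemd unit is one way to get there. The sketch below only prints a unit file; `render_ollama_unit`, the unit name, and the install path in the usage note are hypothetical choices of mine, not something the fork ships.

```shell
#!/bin/sh
# Print a systemd unit for the freshly built binary.
# $1: absolute path to the ollama binary, $2: bind address (host:port).
# There is no User= line: system services run as root by default,
# which matches the root requirement mentioned above.
render_ollama_unit() {
    cat <<EOF
[Unit]
Description=Ollama (Vulkan build)
After=network-online.target
Wants=network-online.target

[Service]
ExecStart=$1 serve
Environment=OLLAMA_HOST=$2
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}
```

You could write it out with `render_ollama_unit /opt/ollama-vulkan/dist/bin/ollama 0.0.0.0:11434 | sudo tee /etc/systemd/system/ollama-vulkan.service`, then run `sudo systemctl daemon-reload` and `sudo systemctl enable --now ollama-vulkan.service`. If you built under /tmp, copy the build somewhere permanent first, since /tmp is usually cleared on reboot. I would copy the whole `dist` directory rather than just the binary, on the assumption that the binary looks for its libraries next to itself.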

Automated Script

If you don’t want to run all the steps manually, you can use this script:

#!/bin/bash

export CGO_ENABLED=1

export LDFLAGS=-s

# Pre-requisites

wget -qO- https://packages.lunarg.com/lunarg-signing-key-pub.asc | sudo tee /etc/apt/trusted.gpg.d/lunarg.asc

sudo wget -qO /etc/apt/sources.list.d/lunarg-vulkan-noble.list http://packages.lunarg.com/vulkan/lunarg-vulkan-noble.list

sudo apt update

sudo apt install -y git vulkan-sdk cmake wget libcap-dev golang-go

mkdir -p /tmp/patches

wget -O /tmp/patches/0002-fix-fix-vulkan-building.patch https://github.com/user-attachments/files/18783263/0002-fix-fix-vulkan-building.patch

# Downloading repos and applying patches

echo "Cloning and setting up ollama-vulkan repository..."

REPO_DIR="/tmp/ollama-vulkan-git"

rm -rf "${REPO_DIR}" 2>/dev/null || true

git clone -b vulkan https://github.com/whyvl/ollama-vulkan.git "${REPO_DIR}"

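# Stop here if the clone failed, so the git, patch and make steps do not run in the wrong directory
cd "${REPO_DIR}" || exit 1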

git remote add ollama_vanilla https://github.com/ollama/ollama.git

git fetch ollama_vanilla --tags

git checkout tags/v0.5.11 -b ollama_vanilla_stable

git checkout vulkan

git merge ollama_vanilla_stable --allow-unrelated-histories --no-edit

patch -p1 -N < /tmp/patches/0002-fix-fix-vulkan-building.patch

make -f Makefile.sync clean sync

# Building

echo "Building CPU variant..."

cmake --preset CPU

cmake --build --parallel --preset CPU

cmake --install build --component CPU --strip

echo "Building Vulkan variant..."

cmake --preset Vulkan

cmake --build --parallel --preset Vulkan

cmake --install build --component Vulkan --strip

echo "Building final ollama binary..."

cd "${REPO_DIR}"

source scripts/env.sh || true

mkdir -p dist/bin

go build -trimpath -buildmode=pie -o dist/bin/ollama .

# Done!

echo "Build completed."

echo "CPU libraries are in: ${REPO_DIR}/dist/lib/ollama"

echo "Vulkan libraries are in: ${REPO_DIR}/dist/lib/ollama/vulkan"

echo "ollama binary is in: ${REPO_DIR}/dist/bin/ollama"

Done!

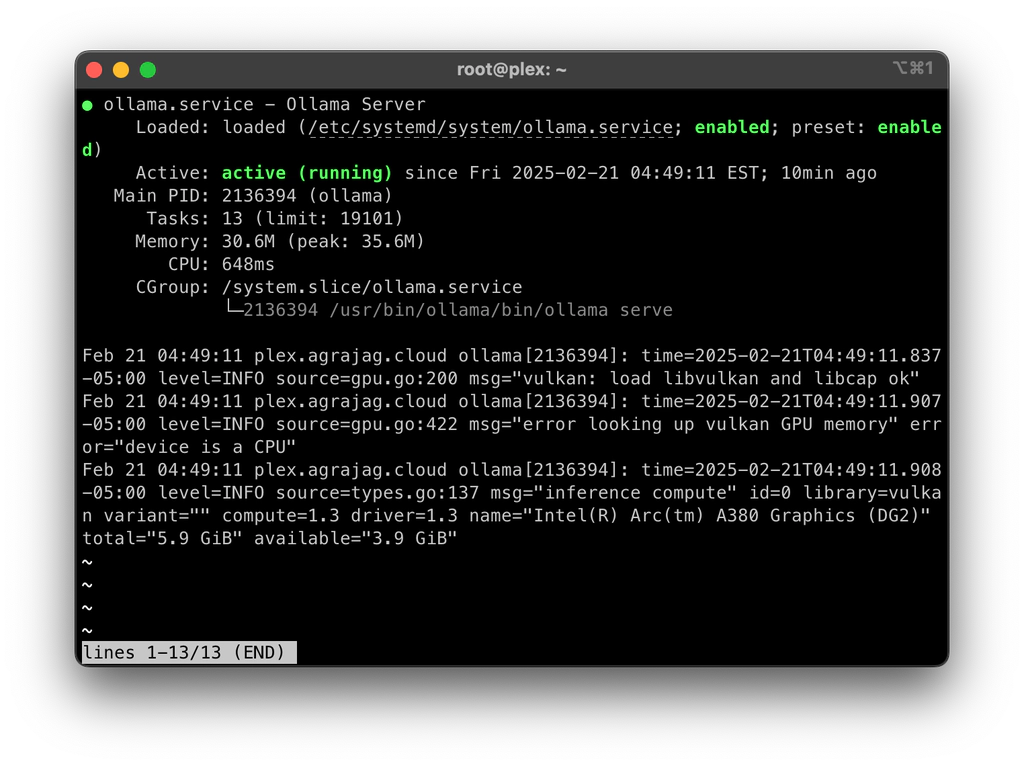

You should now be able to load models using Vulkan, leveraging your Intel GPU.
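To check that the server is reachable, note that `0.0.0.0:11434` is a bind address, not something a client should connect to. Here is a minimal sketch that turns the bind address into a URL for a client on the same machine. The helper name `ollama_client_url` is hypothetical, and it only handles plain `host:port` values, not IPv6 literals.

```shell
#!/bin/sh
# Turn a bind address such as 0.0.0.0:11434 into a URL a local client can call.
ollama_client_url() {
    addr=${1:-127.0.0.1:11434}
    # Fall back to the default Ollama port when none is given.
    case "$addr" in
        *:*) ;;
        *) addr="$addr:11434" ;;
    esac
    host=${addr%:*}
    port=${addr##*:}
    # 0.0.0.0 means "listen on every interface"; use loopback to connect.
    if [ "$host" = "0.0.0.0" ]; then
        host=127.0.0.1
    fi
    printf 'http://%s:%s\n' "$host" "$port"
}
```

With the server running, `curl -s "$(ollama_client_url 0.0.0.0:11434)/api/version"` should return a small JSON document with the version. After loading a model, `./dist/bin/ollama ps` lists it along with whether it is running on the GPU or the CPU.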

Resources

Special thanks to @McBane87 for a Dockerfile that helped me build the project locally, and to @Dts0 for the patch.